Join Mago now!

Secure a spot on our waitlist.


Raw footage is great and all. But if you’re publishing video content or processing video elements in 2025 (for social, branded campaigns, or just storytelling content), what matters is HOW you process the visual layers underneath.

Not just editing cuts, doing color tweaks, or layering sound effects. But full-spectrum processing: motion, texture, lighting, segmentation, style transfer, and so on.

This is what separates forgettable content from scroll-stopping videos that get a lot of shares and engagement.

On platforms where motion = attention, the most dynamic, stylized visuals win. Whether you’re a video editor, a motion designer, or a 3D animator, the ability to break down and reconstruct video elements is now part of the job.

That’s why we’re covering everything you need to know about video production and processing video elements with AI below.

At its core, processing video elements means isolating and manipulating individual parts of a video, like the movement, lighting, or subject detail, to reshape the final look and feel.

It’s not just editing timelines or applying LUTs. It’s about unlocking access to the layers beneath the footage.

For example, take a simple talking-head clip. With the right tools, you can:

From pre-production planning to post-production stylization, a huge part of the creative lift happens after the footage is captured. For more info on that, see our guide on how to optimize your video production process.

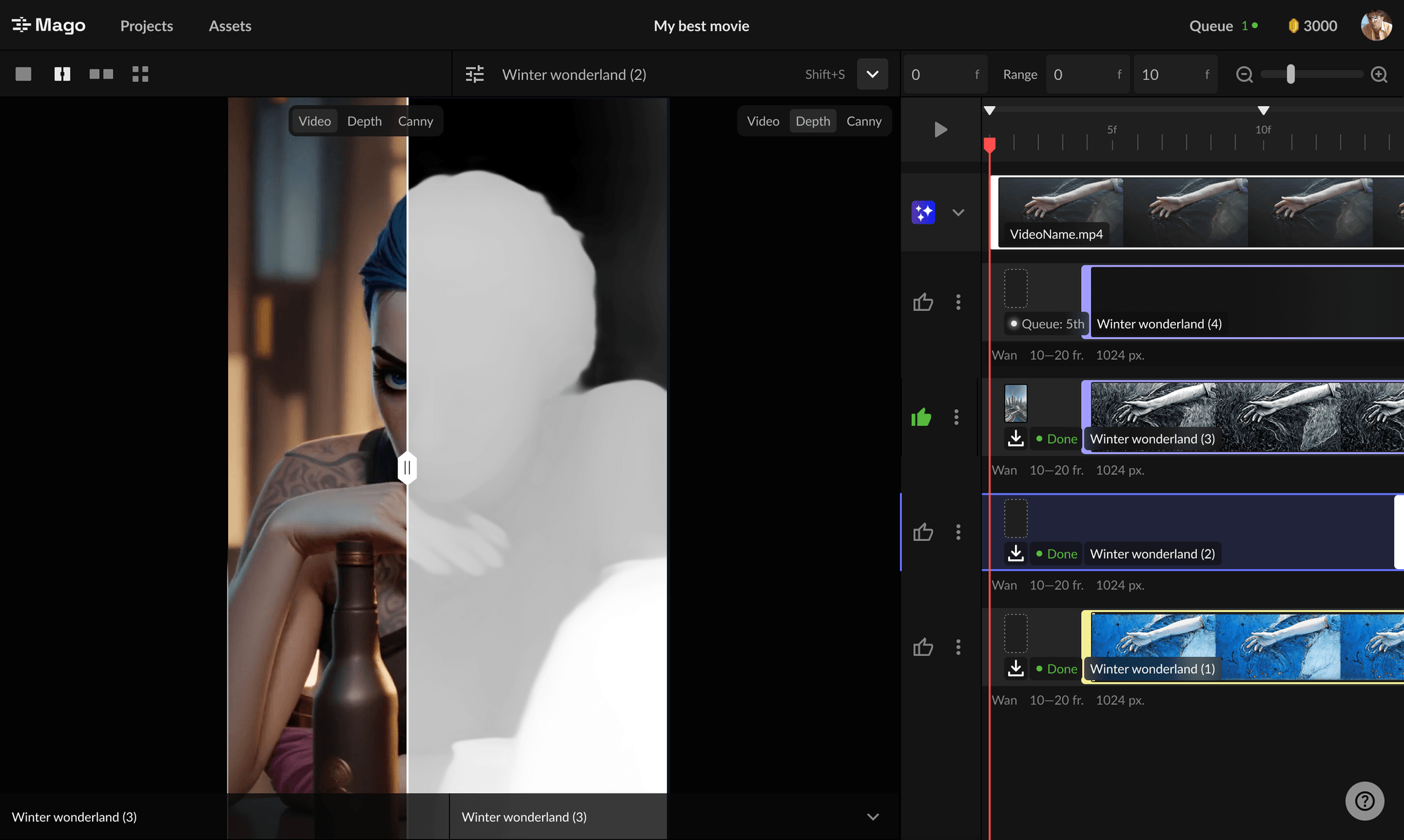

This is where ControlNets come in.

A ControlNet is a method of guiding generative video outputs using reference data, like human pose, object edges, depth maps, or segmented masks. Essentially, this lets you control what the AI keeps and what it transforms.

So instead of generating wild, random outputs, you can:

This is what makes ControlNets powerful. They give you a frame-by-frame structure and let the AI do the creative work on top, without breaking the original composition.
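To make "reference data" a bit more concrete, here is a minimal sketch of one kind of control signal: an edge map pulled from a single grayscale frame. This is not Mago's pipeline and not a ControlNet itself. The `edge_map` function, its `threshold` value, and the tiny sample frame are all hypothetical, and real tools typically use stronger edge detectors. It only illustrates the type of structural guide a generative model could be asked to respect.

```python
def edge_map(frame, threshold=50):
    """Return a binary edge map (1 = edge) for a grayscale frame given as a list of rows."""
    height, width = len(frame), len(frame[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Forward differences to the right and downward, clamped at the borders.
            right = frame[y][min(x + 1, width - 1)]
            below = frame[min(y + 1, height - 1)][x]
            gx = right - frame[y][x]
            gy = below - frame[y][x]
            # Mark the pixel as an edge when brightness changes sharply.
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 1
    return edges


# Hypothetical 3x4 frame: dark region on the left, bright region on the right.
frame = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
control = edge_map(frame)
for row in control:
    print(row)
```

The output marks only the column where dark meets bright. A map like this, computed per frame, is the sort of outline that stays fixed while the style on top changes.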

With video production, AI now gives creators real control over how their video elements behave frame-by-frame. If you’re looking to explore your tooling options, here’s a breakdown of the best video-to-video AI platforms to check out.

Now, from music videos to social media content, here’s how people are using these tools right now.

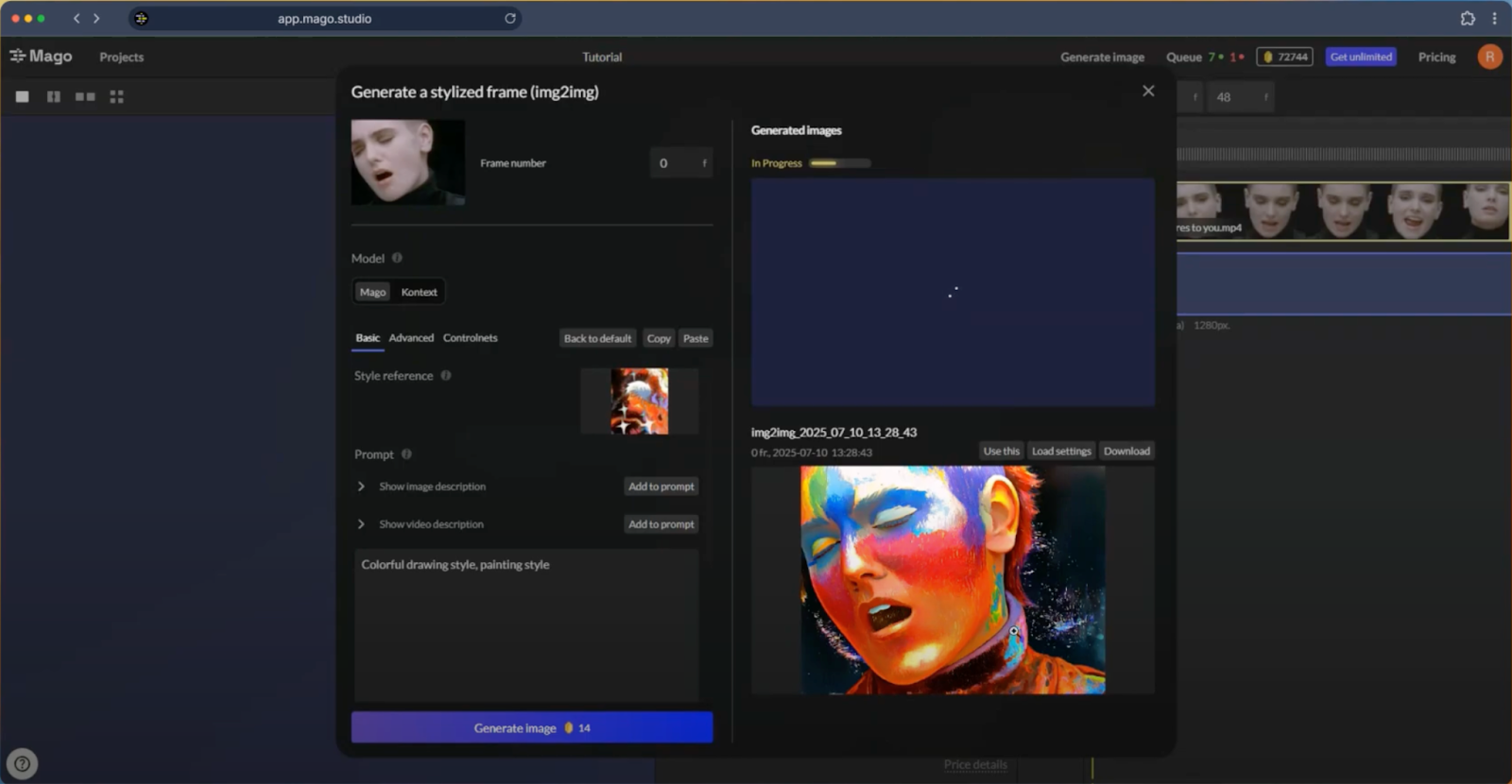

Artists and directors upload raw band or dance footage, then use ControlNets to preserve motion and proportions, all while re-rendering the visuals in anime, watercolor, or sketch styles.

One clip can become five different aesthetics for TikTok, Shorts, Reels, and YouTube. With tools like Mago, creators can remix the same footage across formats - building a consistent visual brand while experimenting with new looks, fast.

Dancers shoot once and swap out outfits, characters, or lighting styles later using segmentation masks and pose control. Movements stay intact, but the look feels fresh across versions.

No need to re-shoot or re-rig.

Instead of hiring VFX artists or 3D artists, editors now layer motion trails, lighting bursts, or texture overlays using AI-driven masks. Great for indie filmmakers or creators with tight budgets.

For long-form explainers, creators can lock in the presenter’s position and re-style only the background or object layers, keeping attention on the subject while still making the visuals scroll-worthy.

During post production, AI lets marketers or editors break down raw footage into modular elements. Then adapt each for TikTok, Reels, Shorts, or YouTube with new themes, effects, or visual filters. This way, one base clip transforms into multiple platform-native versions.

The old workflow (capture footage, do a basic edit, ship it) doesn’t cut it anymore.

Now, processing video elements is about modular creativity.

If you're working in video production today (especially for social media, branded content, or fast-paced campaigns), then processing video elements is part of the job. You’re often stepping into the role of a video-to-video editor, using AI to reconstruct, stylize, and modularize footage on the fly.

This goes beyond “regular” video editing.

For smaller teams, freelancers, and in-house creators, this means generating high-quality video content without relying on traditional VFX pipelines. All from a single source clip.

This is what sets apart standout video production and processing in 2025. Not just speed or polish. But depth of control.

Practically speaking, you don’t need a Hollywood pipeline to get started. Here’s how creators are already doing it with just one clip and the right set of tools.

Start with any performance, talking-head, or b-roll footage. It doesn’t need to be perfect. What matters is movement, composition, and subject clarity. The AI will handle the rest during post-production.

Define where the content’s going and how it should feel.

Think: “anime remix for TikTok,” “sketch-style explainer for LinkedIn,” or “cinematic b-roll for Reels.” The clearer the style, the better the output.

Platforms like Mago handle the heavy lifting.

Once uploaded, you can use ControlNets, such as a real-time depth map of your video or real-time movement detection, to preserve choreography and stylize only the layers you want to change.
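For a rough feel of what "movement detection" means at the pixel level, here is a small sketch using plain frame differencing. It is an assumption-heavy stand-in, not how Mago detects movement: the `motion_mask` and `motion_ratio` helpers, the `threshold` value, and the sample frames are all hypothetical.

```python
def motion_mask(prev_frame, next_frame, threshold=25):
    """Mark pixels (1) whose brightness changed by more than `threshold` between two frames."""
    return [
        [1 if abs(b - a) > threshold else 0 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(prev_frame, next_frame)
    ]


def motion_ratio(mask):
    """Fraction of pixels flagged as moving."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# Hypothetical 3x3 grayscale frames: a bright dot moves one pixel to the right.
prev_frame = [[0, 0, 0], [0, 255, 0], [0, 0, 0]]
next_frame = [[0, 0, 0], [0, 0, 255], [0, 0, 0]]

mask = motion_mask(prev_frame, next_frame)
print(mask)
print(round(motion_ratio(mask), 3))
```

Both the pixel the dot left and the pixel it moved into get flagged, which is why differencing alone only tells you where something changed, not what the subject is. Pose and depth signals fill that gap.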

For more info, see our YouTube guide on restyling your videos with Mago.

Want to keep the dancer’s movement but turn them into a 2D character?

Or re-style the background while keeping the presenter crisp? Use depth maps, pose control, or segmentation masks to keep structure and tweak the rest.
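The "keep structure, tweak the rest" idea can be sketched as simple mask compositing: wherever the segmentation mask says "subject", keep the original pixel, and everywhere else take the stylized one. The `composite` function and the toy pixel values below are hypothetical, and a real pipeline would work on full-color frames with soft mask edges.

```python
def composite(original, stylized, subject_mask):
    """Keep original pixels where the mask is 1 (subject); use stylized pixels elsewhere."""
    return [
        [orig if keep else styled for orig, styled, keep in zip(row_o, row_s, row_m)]
        for row_o, row_s, row_m in zip(original, stylized, subject_mask)
    ]


# Hypothetical 2x3 grayscale frame, a fully re-styled version, and a presenter mask.
original = [[1, 2, 3], [4, 5, 6]]
stylized = [[9, 9, 9], [9, 9, 9]]
subject_mask = [[0, 1, 0], [0, 1, 1]]

result = composite(original, stylized, subject_mask)
print(result)
```

Flip the mask and the same function does the opposite job: a stylized subject over an untouched background.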

Render your stylized video, then duplicate and remix it into multiple platform-specific edits. A/B test formats, swap looks, or use the same footage to create a full campaign’s worth of content.
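Part of making platform-specific edits is plain geometry: cropping one render to different aspect ratios. Here is a minimal sketch that computes centered crop boxes. The `center_crop` helper and the `TARGETS` names are hypothetical, and the ratios are assumptions (vertical 9:16 is common for short-form feeds, 16:9 for landscape players), so check each platform's current specs before exporting.

```python
def center_crop(width, height, ratio_w, ratio_h):
    """Return (x, y, crop_w, crop_h) for the largest centered crop with the given aspect ratio."""
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is as narrow as or narrower than the target: keep full width, trim top and bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    # Round down to even dimensions, which many video encoders prefer.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    return ((width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h)


# Hypothetical export targets as (ratio_w, ratio_h).
TARGETS = {
    "vertical_9x16": (9, 16),
    "square_1x1": (1, 1),
    "landscape_16x9": (16, 9),
}

# Crop boxes for a 1920x1080 source render.
crops = {name: center_crop(1920, 1080, *ratio) for name, ratio in TARGETS.items()}
for name, box in crops.items():
    print(name, box)
```

A centered crop is the simplest choice. If the subject sits off-center, the pose or segmentation data from earlier steps could be used to shift the box instead.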

So, to recap, the edge in video production comes from what happens AFTER the shoot. When you break footage down, rework layers, and reimagine the final results.

The teams and creators winning on social media aren’t necessarily the ones with the biggest cameras or budgets. They’re the ones who know how to break down footage into modular layers. And reshape it for different platforms, formats, and aesthetics.

With tools like Mago, that level of control is no longer reserved for VFX studios. It’s something you can tap into today. Without needing a green screen, animation team, or a massive post-production pipeline.

Want to try it?

Upload your first clip, remix the visual style, and start building high-quality, stylized content that actually stands out.

Join the Mago closed beta now!
